Computational Statistics

Lecture 5

Yixuan Qiu

2023-10-11

Numerical Linear Algebra

Today's Topics

Singular value decomposition

Sparse matrices

Case study: regression and PCA for sparse data

Singular Value Decomposition

Singular Value Decomposition

Singular value decomposition (SVD) can be viewed as an extension to the eigenvalue decomposition of symmetric matrices

SVD applies to general rectangular matrices

Wide applications in dimension reduction, image compression, recommender system, etc.

SVD Theorem [2]

Every matrix A∈Rm×n of rank r can be decomposed as

A=UΣV′=(U1U2)(Σ1000)(V′1V′2),

where Um×m=(u1,…,um) and Vn×n=(v1,…,vn) are orthogonal matrices, U1∈Rm×r, V1∈Rn×r, and Σ1=diag(σ1,…,σr) is a nonnegative diagonal matrix.

σ1≥⋯≥σr>0 are called singular values of A. ui,i=1,…,m and vj,j=1,…,n are called left and right singular vectors of A, respectively.

Compact SVD

According to the factorization theorem, we can also write A=U1Σ1V′1, where A is m×n, U1 is m×r, Σ1 is r×r, and V1 is n×r.

U1 and V1 are column-orthonormal matrices.

This is typically called the compact SVD.

Relation to Eigenvalues

Suppose we have the (compact) SVD A=UΣV′, then

A′A=VΣU′UΣV′=VΣ2V′

AA′=UΣV′VΣU′=UΣ2U′

Singular values of A are square roots of positive eigenvalues of A′A and AA′

For generality, we usually also allow singular values to be zero, by extending the U, Σ, and V matrices to proper dimensions.

Partial SVD

If we only extract the largest k singular values and associated singular vectors of A, we call the result U(k)Σ(k)V′(k) the partial SVD of A.

Σ(k)=diag(σ1,…,σk), σ1≥⋯≥σk

U(k)=(u1,…,uk)

V(k)=(v1,…,vk)

Relation to Low-rank Approximation

Partial SVD is useful due to an interesting theorem.

Suppose that a matrix Am×n, m≥n has SVD A=UΣV′, where Σ=diag(σ1,…,σn) and σ1≥⋯≥σn≥0. Then the best rank-k approximation to A in the Frobenius norm is given by

Ak=k∑i=1σiuiv′i.

The claim also holds with the operator norm.

SVD Computation

SVD Computation

Similar to eigenvalue computation, there are different use cases of SVD:

Computing all singular values (full SVD)

Computing the largest k singular values (partial SVD)

It is not common to request the smallest singular values.

Computing Full SVD

Computing Full SVD

Similar to full eigenvalue decomposition, computing full SVD also typically has two steps:

Looking for orthogonal matrices U1 and V1 such that U′1AV1 is bidiagonal U′1AV1=[B0],B=⎡⎢ ⎢ ⎢ ⎢ ⎢ ⎢ ⎢ ⎢⎣ρ1θ2ρ2θ3⋱⋱ρn−1θnρn⎤⎥ ⎥ ⎥ ⎥ ⎥ ⎥ ⎥ ⎥⎦

Applying the QR algorithm to the matrix B to compute all singular values

The Golub-Kahan Householder (Bidiagonalization) Algorithm [2]

Any Am×n with m≥n can be reduced to U′1AV1=[B0] by orthogonal matrices U1∈Rm×m and V1∈Rn×n. U1 and V1 are product of simple orthogonal matrices:

- U1=Q1Q2⋯Qn

- V1=P0P1⋯Pn−2

The singular values of B are the same as those of A.

The QR Algorithm

Since singular values of B are square roots of eigenvalues of B′B, the QR algorithm can proceed as follows:

Compute the QR decomposition: B(k)′B(k)=QkRk

Update B(k) to B(k+1) such that B(k+1)′B(k+1)=RkQk=Q′kB(k)′B(k)Qk

B(k) is bidiagonal for all k.

The QR decomposition and the update of B(k) would use the special structure of B(k), so B(k)′B(k) will not be explicitly formed.

Advanced Algorithms

In practice, there are many improvements made to this basic method

A good summary of those advanced algorithms can be found at https://www.cs.utexas.edu/users/inderjit /public_papers/HLA_SVD.pdf

Computing Partial SVD

Computing Partial SVD

Partial SVD seeks the largest k singular values of a matrix Am×n

Can be reduced to computing the largest k eigenvalues of A′A (if m≥n) or AA′ (if m<n)

Using the power method or other iterative methods, and assuming m≥n, we need to realize the operation v→A′Av

Be cautious of the computing order! You should compute x←Av first and then do y←A′x

Advanced Algorithms

Another algorithm to compute partial SVD is the augmented implicitly restarted Lanczos bidiagonalization method [4]

It works on the original matrix A instead of A′A

Potentially more numerical stable than computing the eigenvalues of A′A

Implementation

- Simply call

svd()for full (but compact) SVD - You can specify how many left/right singular vectors to return

set.seed(123)n = 1000p = 500x = matrix(rnorm(n * p), n, p)str(svd(x))## List of 3## $ d: num [1:500] 53.6 53.3 53.3 53 52.6 ...## $ u: num [1:1000, 1:500] 0.00265 -0.03029 0.00451 -0.00763 -0.0034 ...## $ v: num [1:500, 1:500] 0.01699 0.00386 0.00971 -0.01931 0.01455 ...str(svd(x, nu = 10, nv = 10))## List of 3## $ d: num [1:500] 53.6 53.3 53.3 53 52.6 ...## $ u: num [1:1000, 1:10] 0.00265 -0.03029 0.00451 -0.00763 -0.0034 ...## $ v: num [1:500, 1:10] 0.01699 0.00386 0.00971 -0.01931 0.01455 ...Benchmark

- Use

nu = 0andnv = 0if you do not need singular vectors

library(dplyr)bench::mark( svd(x), svd(x, nu = 100, nv = 100), svd(x, nu = 1, nv = 1), svd(x, nu = 0, nv = 0), min_iterations = 3, max_iterations = 10, check = FALSE) %>% select(expression, min, median)## # A tibble: 4 × 3## expression min median## <bch:expr> <bch:tm> <bch:tm>## 1 svd(x) 785ms 795ms## 2 svd(x, nu = 100, nv = 100) 784ms 786ms## 3 svd(x, nu = 1, nv = 1) 786ms 788ms## 4 svd(x, nu = 0, nv = 0) 272ms 275msImplementation

- There are various options for partial SVD

irlba::irlbaRSpectra::svdssvd::propack.svd,svd::trlan.svd, andsvd::ztrlan.svd

res1 = irlba::irlba(x, 10)res1$d## [1] 53.56025 53.30589 53.25349 53.03145 52.56782 52.30170 52.13693 51.85140## [9] 51.64052 51.42326res2 = RSpectra::svds(x, k = 10)res2$d## [1] 53.56025 53.30589 53.25349 53.03145 52.56782 52.30170 52.13693 51.85140## [9] 51.64052 51.42326Implementation

res3 = svd::propack.svd(x, neig = 10)res3$d## [1] 53.56025 53.30589 53.25349 53.03145 52.56782 52.30170 52.13693 51.85140## [9] 51.64052 51.42326res4 = svd::trlan.svd(x, neig = 10)res4$d## [1] 53.56025 53.30589 53.25349 53.03145 52.56782 52.30170 52.13693 51.85140## [9] 51.64052 51.42326res5 = svd::ztrlan.svd(x, neig = 10)res5$d## [1] 53.56025 53.30589 53.25349 53.03145 52.56782 52.30170 52.13693 51.85140## [9] 51.64052 51.42326Benchmark

irlba::irlba,RSpectra::svds, andsvd::propack.svdare roughly at the same level

bench::mark( irlba::irlba(x, 10, tol = 1e-8), RSpectra::svds(x, k = 10, opts = list(tol = 1e-8)), svd::propack.svd(x, neig = 10, opts = list(tol = 1e-8)), svd::trlan.svd(x, neig = 10, opts = list(tol = 1e-8)), svd::ztrlan.svd(x, neig = 10, opts = list(tol = 1e-8)), min_iterations = 10, max_iterations = 10, check = FALSE) %>% select(expression, min, median)## # A tibble: 5 × 3## expression min median## <bch:expr> <bch:tm> <bch:tm>## 1 irlba::irlba(x, 10, tol = 1e-08) 97.7ms 109.8ms## 2 RSpectra::svds(x, k = 10, opts = list(tol = 1e-08)) 88.4ms 89.8ms## 3 svd::propack.svd(x, neig = 10, opts = list(tol = 1e-08)) 99.2ms 102.3ms## 4 svd::trlan.svd(x, neig = 10, opts = list(tol = 1e-08)) 241.6ms 248.7ms## 5 svd::ztrlan.svd(x, neig = 10, opts = list(tol = 1e-08)) 400.8ms 406.1msImplementation

- We will see how partial SVD can be used to efficiently compute PCA of sparse data

Sparse Matrix

Sparse Matrix

A matrix that has very few nonzero elements.

- Mathematically, not very different from dense matrices

- But sparsity can make a huge impact on computing

Problems

We are mostly interested in the following problems:

How to store sparse data/matrices

How to implement basic matrix operations on sparse matrices

How to do statistical computing on sparse matrices

Storage

Two major storage formats:

Coordinate (COO) format

Compressed sparse column (CSC) format

COO Format

Simplest storage scheme

Row indices, column indices, nonzero elements

A=⎡⎢ ⎢ ⎢ ⎢ ⎢ ⎢⎣1000030050007890021000000⎤⎥ ⎥ ⎥ ⎥ ⎥ ⎥⎦

i=[12342343]j=[11334445]x=[13725819]

COO Format

For an m×n matrix with nnz nonzero elements

COO format needs to store 3⋅nnz numbers

However, the j vector contains redundant information

The CSC format is a more economic scheme to represent sparse matrices

CSC Format

The x vector stores elements in A column by column

Use a "pointer vector" p, where pj+1−pj is the number of nonzero elements in column j

A=⎡⎢ ⎢ ⎢ ⎢ ⎢ ⎢⎣1000030050007890021000000⎤⎥ ⎥ ⎥ ⎥ ⎥ ⎥⎦

i=[12342343]j=[11334445]p=[022478]x=[13725819]

CSC Format

The CSC format only needs to store the i, p, and x vectors

2⋅nnz+n+1 numbers in total

CSC Format

The CSC format only needs to store the i, p, and x vectors

2⋅nnz+n+1 numbers in total

Question: If we also know that the sparse matrix is binary, i.e., every element is either 0 or 1, then what is a good scheme for storage?

Basic Operations

Elementwise operations are relatively simple

One of the most important operations for linear systems and eigenvalue computation is the matrix-vector multiplication

v→Av and/or v→A′v

Computing A′v

The key point is to extract each column of A

Each column can be viewed as a sparse vector

A=⎡⎢ ⎢ ⎢ ⎢ ⎢ ⎢⎣1000030050007890021000000⎤⎥ ⎥ ⎥ ⎥ ⎥ ⎥⎦=[a1a2a3a4a5]

A′v=[a′1va′2va′3va′4va′5v]′

Extract aj

- aj has (pj+1−pj) nonzero elements

- The (pj+1)-th element of x is the first nonzero element of aj

- The (pj+1)-th element of i is the location of this element in the sparse vector

- The (pj+2)-th element of x is the second nonzero element of aj

- The (pj+2)-th element of i is the location of this element in the sparse vector

- ...

Extract aj

A=⎡⎢ ⎢ ⎢ ⎢ ⎢ ⎢⎣1000030050007890021000000⎤⎥ ⎥ ⎥ ⎥ ⎥ ⎥⎦

i=[12342343]p=[022478]x=[13725819]

For example, to get the third column, a3

p4−p3=2, meaning a3 has two nonzero elements

p3+1=3, x3=7, i3=3

p3+2=4, x4=2, i4=4

a3 can be expressed as {3→7,4→2}

Computing A′v

Similarly, we can get a1={1→1,2→3}a2={}a3={3→7,4→2}a4={2→5,3→8,4→1}a5={3→9}

Then the inner products only involve nonzero elements a′1v=1⋅v1+3⋅v2a′2v=0a′3v=7⋅v3+2⋅v4⋯

Computing Av

- We can again use the sparse vectors aj to compute Av

A=⎡⎢ ⎢ ⎢ ⎢ ⎢ ⎢⎣1000030050007890021000000⎤⎥ ⎥ ⎥ ⎥ ⎥ ⎥⎦=[a1a2a3a4a5]

Av=v1a1+⋯+v5a5

- Each vjaj is still a sparse vector formed by multiplying each nonzero element of aj by vj

Computing Av

Therefore, computing Av reduces to:

Initialize r←0m

For j=1,…,n do r←r+vjaj

For example, a3={3→7,4→2}

Then r←r+v3a3 expands to

r3←r3+7⋅v3r4←r4+2⋅v4

Computational Complexity

In general, for matrix Am×n with nnz nonzero elements

The complexity of v→Av and v→A′v are both O(nnz)

This makes many iterative methods very efficient on sparse matrices

Implementation

- The Matrix R package has nice support for different kinds of sparse matrices

- A simple way to construct sparse matrices is to create from an

(i, j, x)triplet (COO format) - The result is typically in CSC format (

dgCMatrix)

library(Matrix)i = c(1, 2, 3, 4, 2, 3, 4, 3)j = c(1, 1, 3, 3, 4, 4, 4, 5)x = c(1, 3, 7, 2, 5, 8, 1, 9)xsp = sparseMatrix(i = i, j = j, x = x, dims = c(5, 5))xsp## 5 x 5 sparse Matrix of class "dgCMatrix"## ## [1,] 1 . . . .## [2,] 3 . . 5 .## [3,] . . 7 8 9## [4,] . . 2 1 .## [5,] . . . . .Implementation

- Note that internally, the i vector uses 0-based indices

xsp## 5 x 5 sparse Matrix of class "dgCMatrix"## ## [1,] 1 . . . .## [2,] 3 . . 5 .## [3,] . . 7 8 9## [4,] . . 2 1 .## [5,] . . . . .str(xsp)## Formal class 'dgCMatrix' [package "Matrix"] with 6 slots## ..@ i : int [1:8] 0 1 2 3 1 2 3 2## ..@ p : int [1:6] 0 2 2 4 7 8## ..@ Dim : int [1:2] 5 5## ..@ Dimnames:List of 2## .. ..$ : NULL## .. ..$ : NULL## ..@ x : num [1:8] 1 3 7 2 5 8 1 9## ..@ factors : list()Implementation

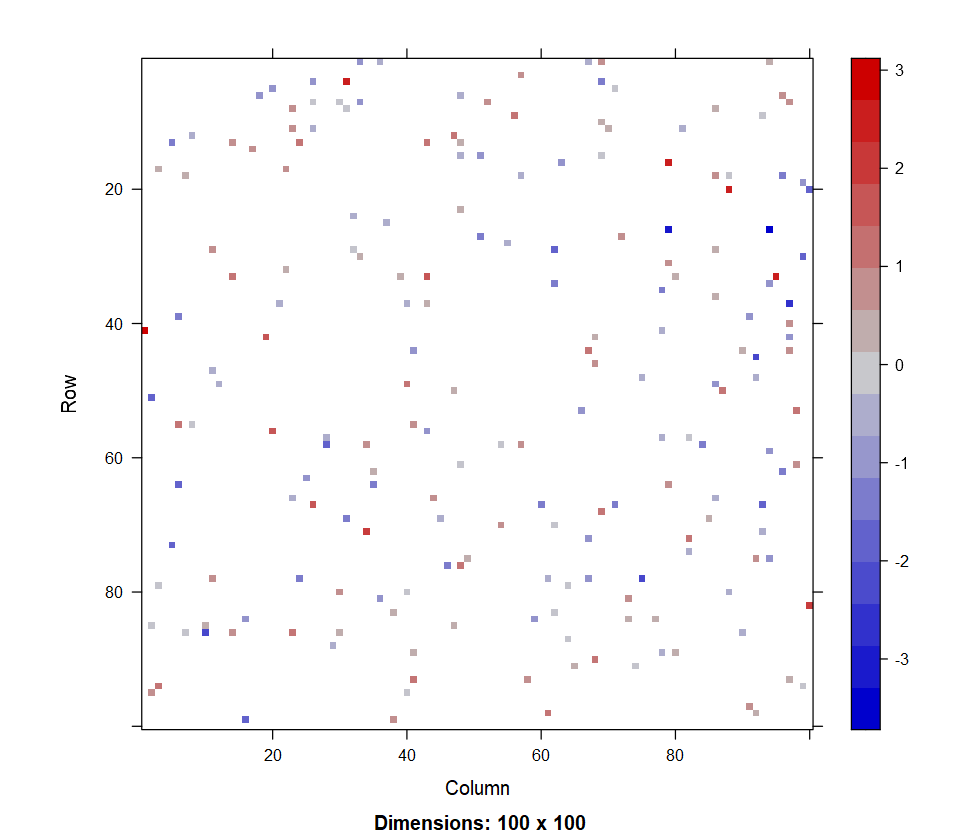

- Another way to construct sparse matrices is to convert from dense matrices

set.seed(123)n = 1000nnz = floor(0.01 * n^2)xdata = numeric(n^2)xdata[sample(n^2, nnz)] = rnorm(nnz)x = matrix(xdata, n, n)class(x)## [1] "matrix" "array"xsp = as(x, "sparseMatrix")class(xsp)## [1] "dgCMatrix"## attr(,"package")## [1] "Matrix"Benchmark

- Matrix multiplication operations are already defined for sparse matrices

bench::mark( x %*% x, crossprod(x), xsp %*% xsp, crossprod(xsp), min_iterations = 10, max_iterations = 10, check = FALSE) %>% select(expression, min, median)## # A tibble: 4 × 3## expression min median## <bch:expr> <bch:tm> <bch:tm>## 1 x %*% x 488.19ms 493.53ms## 2 crossprod(x) 482.8ms 493.61ms## 3 xsp %*% xsp 1.3ms 1.53ms## 4 crossprod(xsp) 1.48ms 1.58msSparse Matrix Computation

Direct Methods

Direct methods for sparse matrices are typically difficult problems

Preservation of sparsity is a big challenge

If A is sparse, A=LU, L and U are not necessarily sparse

Therefore, proper ordering of rows/columns of A is critical

With some permutation matrices P and Q, PAQ=~L~U, ~L and ~U may be much more sparse than L and U

Direct Methods

There are indeed sparse decomposition methods, e.g.,

Sparse LU decomposition

Sparse Cholesky decomposition

They are typically very sophisticated

Software support such as SuiteSparse

Iterative Methods

We are mostly interested in iterative methods for sparse matrices

Small memory cost

Computationally efficient

Easy to implement

Core Idea

Iterative methods that rely on v→Av, v→A′v, or some other minor operations are readily available for sparse matrices

Conjugate gradient method

Iterative eigenvalue algorithms such as power method

Partial SVD

Case Studies

Statistical Computing on Sparse Matrix

Regression

Ridge regression

PCA

Lasso (preview)

Regression on Sparse Data

Suppose the data matrix X is sparse

Target is ^β=(X′X)−1X′Y

However, X′X may no longer be sparse

Also, X typically does not have a "specialized" QR decomposition

Therefore, there is no obvious benefit of the sparsity (except for computing X′X)

Regression on Sparse Data

In contrast, CG can effectively utilize the sparsity

CG solves Ax=b, where A=X′X and b=X′Y

A is never explictly formed

CG requires the operation v→Av=X′Xv

Compute two sparse matrix-vector multiplications

u←Xv

w←X′u

Implementation

- First simulate sparse data and use the dense solver to get the result

library(Matrix)set.seed(123)n = 10000p = 500xsp = rsparsematrix(n, p, density = 0.001)x = as.matrix(xsp)y = rnorm(n)bhat = solve(crossprod(x), crossprod(x, y))bhat[1:5]## [1] -0.003835525 -0.211645950 0.102156056 0.294562285 0.031367565Implementation

- Slight modification of the CG code

reg_cg = function(Xsp, y, x0 = rep(0, ncol(Xsp)), eps = 1e-6){ b = as.numeric(crossprod(Xsp, y)) x = x0 p = r = b - as.numeric(crossprod(Xsp, Xsp %*% x)) r2 = sum(r^2) errs = c() for(i in seq_along(b)) { Ap = as.numeric(crossprod(Xsp, Xsp %*% p)) alpha = r2 / sum(p * Ap) x = x + alpha * p r = r - alpha * Ap r2_new = sum(r^2) err = sqrt(r2_new) errs = c(errs, err) if(err < eps) break beta = r2_new / r2 p = r + beta * p r2 = r2_new } list(beta_hat = x, errs = errs, niter = i)}Implementation

- Verify the result

cg = reg_cg(xsp, y, eps = 1e-6)bhat_cg = cg$beta_hatcg$niter## [1] 56bhat_cg[1:5]## [1] -0.003835524 -0.211645950 0.102156055 0.294562283 0.031367563max(abs(bhat_cg - bhat))## [1] 1.548608e-07Benchmark

- Significant speedup over dense operations

bench::mark( solve(crossprod(x), crossprod(x, y)), reg_cg(xsp, y), min_iterations = 3, max_iterations = 10, check = FALSE) %>% select(expression, min, median)## # A tibble: 2 × 3## expression min median## <bch:expr> <bch:tm> <bch:tm>## 1 solve(crossprod(x), crossprod(x, y)) 1.37s 1.37s## 2 reg_cg(xsp, y) 3.83ms 5.06msRidge Regression on Sparse Data

Ridge regression is similar, but now A=X′X+λI and b=X′Y

Again, A should not be explictly formed

Now we need the operation v→Av=(X′X+λI)v

Compute two sparse matrix-vector multiplications and one vector addition

u←Xv

w←X′u

z←w+λv

Excercises

Implement the ridge regression for a sparse X with n=10000 and p=500

What if p≫n? For example, X is a sparse matrix with n=500 and p=10000

We can find that the majority part of the CG code is unchanged in different problems. Design an implementation of CG that is as general as possible. Hint: pass a function

Axas the first argument of CG, whereAxhas the signatureAx = function(x, args) {...}Axshould implement the operation v→Av, andargscontains information about A.

PCA on Sparse Data

Another common task for large data is computing PCA

Equivalent to computing the largest k eigenvalues of the sample covariance matrix

An obvious way is to first compute S=1n−1n∑i=1(xi⋅−¯x)(xi⋅−¯x)′, where xi⋅∈Rp is the i-th observation, and ¯x∈Rp is the sample mean vector

Then compute eigenvalues of S

PCA on Sparse Data

However, this does not fully utilize the sparsity

In fact, S is generally a dense matrix

A better scheme is to observe that S=1n−1(X−1n¯x′)′(X−1n¯x′), where X is the data matrix, and 1n is a vector of ones

Ignoring the (n−1)−1 factor, we only need to compute the eigenvalues of A′A, where A=X−1n¯x′

Also equivalent to the partial SVD of A

PCA on Sparse Data

Then we need to implement the operations v→Av and v→A′v

As a first step, we need to compute ¯x. This is relatively easy since we have shown how to extract columns of X

Then to do v→Av=(X−1n¯x′)v:

u←Xv

s←¯x′v

w←u−s1n

PCA on Sparse Data

Similarly, to do v→A′v=(X−1n¯x′)′v:

u←X′v

s←1′v

w←u−s¯x

Implementation and Benchmark

See https://statr.me/2019/11/rspectra-center-scale/ for more details

Especially the

centerandscaleparameters in theRSpectra::svdsfunction

References

[1] Grégoire Allaire and Sidi Mahmoud Kaber (2008). Numerical linear algebra. Springer.

[2] Åke Björck (2015). Numerical methods in matrix computations. Springer.

[3] Gene H. Golub and William Kahan (1965). Calculating the singular values and pseudoinverse of a matrix. Journal of SIAM: Series B, Numerical Analysis, 205–224.

[4] James Baglama and Lothar Reichel (2005). Augmented implicitly restarted Lanczos bidiagonalization methods. SIAM Journal on Scientific Computing, 27(1), 19-42.

[5] Iain S. Duff, Albert M. Erisman, and John K. Reid (2017). Direct methods for sparse matrices. Oxford University Press.

[6] Yousef Saad (2003). Iterative methods for sparse linear systems. Society for Industrial and Applied Mathematics.